Wednesday, October 22, 2014

SCADA System

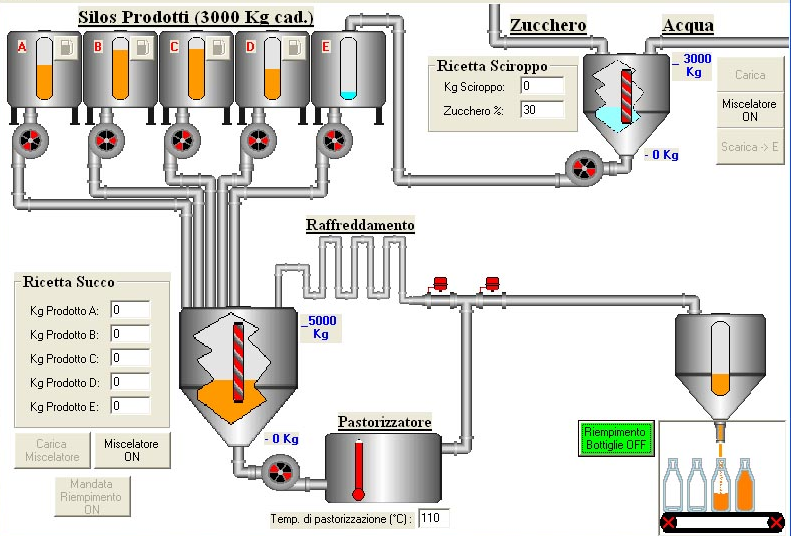

DEFINITION OF A SCADA SYSTEM.

SCADA stands for Supervisory Control And Data Acquisition.

A SCADA system is a computer-based monitoring and control system that allows any kind of installation to be supervised remotely. Unlike in distributed control systems (DCS), the control loop in a SCADA system is generally closed by the operator; distributed control systems are characterized by performing control actions automatically. Today it is easy to find SCADA systems performing automatic control tasks at any level, but their main task remains monitoring and operator-initiated control. Table No. 1 compares the main features of SCADA systems and distributed control systems (DCS); these features are typical of each type rather than exclusive to it.

The flow of information in a SCADA system is as follows. It begins with the physical phenomenon, that is, the variable we want to measure. Depending on the process, the nature of this phenomenon varies widely: pressure, temperature, flow, power, current, voltage, pH, density, etc. The phenomenon must be turned into a variable the SCADA system can understand, that is, an electrical variable. Sensors or transducers are used for this purpose.

Sensors or transducers convert variations of the physical phenomenon into proportional variations of an electrical variable. The most commonly used electrical variables are voltage, current, charge, resistance and capacitance.

However, these various types of electrical signals must be processed before the digital computer can interpret them. Signal conditioners are used for this; their function is to bring all these electrical variations onto a common current or voltage scale. They also provide electrical isolation and filter the signal to protect the system from transients and noise originating in the field.
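Signal conditioning is normally done in analog hardware, but the scaling it performs is a simple linear mapping. The Python sketch below shows only that arithmetic, assuming a hypothetical transducer whose output spans 0 to 100 mV and the common 4-20 mA industrial current loop as the target range. The function names are illustrative, and isolation and filtering are not modeled.

```python
def linear_scale(value, in_min, in_max, out_min, out_max):
    """Map value from [in_min, in_max] onto [out_min, out_max] linearly."""
    if in_max == in_min:
        raise ValueError("input range must be non-zero")
    fraction = (value - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


def condition_to_4_20ma(value, in_min, in_max):
    """Scale a transducer output onto the standard 4-20 mA current loop."""
    return linear_scale(value, in_min, in_max, 4.0, 20.0)


# Hypothetical transducer whose output spans 0-100 mV.
print(condition_to_4_20ma(0.0, 0.0, 100.0))    # 4.0
print(condition_to_4_20ma(50.0, 0.0, 100.0))   # 12.0
print(condition_to_4_20ma(100.0, 0.0, 100.0))  # 20.0
```

A 4 mA "live zero" is used in the example because it lets the receiving side tell a real zero reading apart from a broken wire (0 mA).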

Once the signal has been conditioned, the data conversion block converts it into an equivalent digital value. This function is generally performed by an analog-to-digital (A/D) conversion circuit. The computer stores this information, which is used for analysis and decision making. At the same time, the information is displayed to the system's user in real time.
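The A/D step can be sketched as an ideal converter that maps a voltage onto an integer count, after which the computer turns that count back into engineering units for storage and display. This is a minimal Python sketch assuming a hypothetical 12-bit converter with a 0-10 V input range, on a channel where 0-10 V represents 0-150 degrees C; real converters add offset, gain error and noise that it ignores.

```python
def adc_sample(voltage, v_ref=10.0, bits=12):
    """Ideal unipolar ADC: quantize 0..v_ref volts into an n-bit integer count."""
    max_count = (1 << bits) - 1                 # 4095 for a 12-bit converter
    voltage = min(max(voltage, 0.0), v_ref)     # clamp to the converter's input range
    return round(voltage / v_ref * max_count)


def counts_to_engineering(count, eng_min, eng_max, bits=12):
    """Scale a raw count back to engineering units for storage and display."""
    max_count = (1 << bits) - 1
    return eng_min + (count / max_count) * (eng_max - eng_min)


# Hypothetical temperature channel: 0-10 V corresponds to 0-150 degrees C.
count = adc_sample(5.0)
temperature = counts_to_engineering(count, 0.0, 150.0)
print(count)                                    # 2048
print(f"{temperature:.1f} C")                   # 75.0 C

# Smallest voltage step the converter can distinguish.
resolution = 10.0 / ((1 << 12) - 1)
print(f"{resolution * 1000:.2f} mV per count")  # 2.44 mV per count
```

The resolution line shows the trade-off behind choosing a converter: each extra bit halves the smallest change the SCADA system can detect on that channel.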

Based on this information, the operator can decide to perform a control action on the process. The operator commands the computer to carry it out, and the digital information must once again be converted into an electrical signal. This electrical signal is processed by an output control stage, which acts as a signal conditioner, scaling the signal to drive a given device: a relay coil, the setpoint of a controller, etc.
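The output path is the same arithmetic run in reverse. The sketch below assumes a hypothetical 12-bit digital-to-analog converter (DAC) driving a 4-20 mA loop for a controller setpoint, plus a discrete output for a relay coil; the function names and ranges are illustrative only.

```python
def setpoint_to_dac(setpoint, eng_min, eng_max, bits=12):
    """Convert an operator setpoint in engineering units into a DAC count."""
    max_count = (1 << bits) - 1
    setpoint = min(max(setpoint, eng_min), eng_max)   # never command outside the range
    fraction = (setpoint - eng_min) / (eng_max - eng_min)
    return round(fraction * max_count)


def dac_to_milliamps(count, bits=12):
    """Output conditioning: scale a DAC count onto a 4-20 mA current loop."""
    max_count = (1 << bits) - 1
    return 4.0 + (count / max_count) * 16.0


def relay_output(energize):
    """Discrete output: 1 energizes the relay coil, 0 de-energizes it."""
    return 1 if energize else 0


# The operator requests a valve position of 50 % on a 0-100 % scale.
count = setpoint_to_dac(50.0, 0.0, 100.0)
print(count)                                  # 2048
print(f"{dac_to_milliamps(count):.2f} mA")    # 12.00 mA
print(relay_output(True))                     # 1
```

Clamping the setpoint before conversion is a deliberate choice in this sketch: an operator typo should saturate at the end of the range rather than wrap around to a nonsensical count.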

WHEN IS A SCADA SYSTEM NEEDED?

To assess whether a SCADA system is needed to manage a given installation, the process to be controlled should have the following characteristics:

A) The number of process variables that need to be monitored is high.

B) The process is geographically distributed. This condition is not restrictive, since a SCADA system can also be installed to monitor and control a process concentrated in one location.

C) Process information is needed at the moment changes occur in the process; in other words, the information is required in real time.

D) There is a need to optimize and facilitate plant operation and decision making, both managerial and operational.

E) The benefits to the process justify the investment in a SCADA system. These benefits may take the form of increased production effectiveness, improved safety standards, etc.

F) The complexity and speed of the process allow most control actions to be initiated by an operator. Otherwise, an automatic control system is required, which may be a distributed control system, PLCs, closed-loop controllers or a combination of these.

FUNCTIONS.

Among the basic functions performed by a SCADA system are the following:

A) Collect, store and display information from field signals continuously and reliably: device status, measurements, alarms, etc.

B) Execute control actions initiated by the operator, such as opening or closing valves, starting or stopping pumps, etc.

C) Alert the operator to changes detected in the plant, both those considered abnormal (alarms) and changes that occur during the plant's daily operation (events). These changes are stored in the system for later analysis.
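The alarm/event distinction can be sketched as a single time-stamped log in which abnormal conditions are tagged as alarms and normal operational changes as events. This Python sketch assumes simple high/low limits on an analog tag; the class, tag names and limits are hypothetical, and a real SCADA historian would persist records to disk or a database instead of keeping them in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Record:
    timestamp: datetime
    tag: str
    kind: str        # "ALARM" (abnormal condition) or "EVENT" (normal operation)
    message: str


@dataclass
class AlarmEventLog:
    records: list = field(default_factory=list)

    def check_analog(self, tag, value, low, high):
        """Store an alarm if an analog value leaves its normal band."""
        if value < low or value > high:
            self._store(tag, "ALARM", f"{tag}={value} outside [{low}, {high}]")
            return True
        return False

    def log_event(self, tag, message):
        """Store a normal operational change, such as a pump start."""
        self._store(tag, "EVENT", message)

    def alarms(self):
        """Return only the alarm records, e.g. for later analysis."""
        return [r for r in self.records if r.kind == "ALARM"]

    def _store(self, tag, kind, message):
        self.records.append(Record(datetime.now(timezone.utc), tag, kind, message))


log = AlarmEventLog()
log.log_event("PUMP-01", "Pump started by operator")
log.check_analog("TANK-01.LEVEL", 9.6, low=1.0, high=9.0)   # above high limit: alarm
log.check_analog("TANK-01.LEVEL", 5.0, low=1.0, high=9.0)   # within limits: nothing stored
for rec in log.records:
    print(rec.timestamp.isoformat(), rec.kind, rec.tag, rec.message)
```

Keeping alarms and events in one time-ordered log, rather than two separate lists, makes it easier to reconstruct the sequence of operator actions and plant changes that led up to an alarm.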

D) General applications based on the information obtained by the system, such as reports, trend graphs, variable history, estimates, forecasts, leak detection, etc.

Monday, September 22, 2014

Sunday, September 21, 2014

History of Pentium processors

The evolution of Intel processors over the last twenty years has been truly remarkable. Two decades ago, 486 processors were the absolute cutting edge of desktop hardware, with clock speeds in the tens of megahertz. Then Intel introduced its Pentium chips, and something changed forever in the computer world. A new generation of speed and optimizations appeared and, although it was not without problems, it generated the spark that propelled the development of multiple processor series, up to the impressive chips we use today.

It was the beginning of the 1990s or, more precisely, 1993. The 486 processors had already been on the market for four years, and anyone who owned one could be considered a demigod walking the earth, given the "heavy" applications of the time: the DOS operating system, with Windows 3.1 as its "environment". But Intel, which at the time was facing much tougher competition from alternatives such as IBM, AMD, Cyrix and Texas Instruments, decided to launch a new platform that considerably improved on the technology of its 486 chips. The name chosen was Pentium, representing the fifth generation of processors. One reason for the new name was that the patent office refused to register numbers as trademarks. At that time, processors were designated 80386 and 80486. Had it been possible, what we know today as the Pentium might have been nothing more than the 80586, but Intel knew it would need more than a flashy name to prevail over the existing platforms. The 486 was so popular that Intel only stopped making it in 2007, although by then it was aimed at embedded systems with specific purposes.

On March 22, 1993, the Pentium 60 was born. Both its clock frequency and its bus were synchronized at 60 megahertz, and it marked the arrival of a new socket, Socket 4, so a new motherboard was needed to host it (with one exception, which we will mention later). An integrated floating-point unit, a 64-bit data bus and massive power consumption (it ran at five volts) were some of the features of the new processor. Known in technical circles as the original P5, the Pentium had only one faster model, running at 66 megahertz. Power consumption became a real problem: Intel had to supply a stable 5.25 V to the 66 megahertz chip, which also awoke a demon that even today we have not managed to defeat: temperature. A more efficient design was required (it was not for nothing that the Pentium was nicknamed the "coffee heater"), and that is how, in October 1994, Intel came to create the P54C, a revised version of the Pentium that not only lowered the voltage to 3.3 V but also made it possible to raise clock speeds to 75, 90 and 100 megahertz. However, two very important points worked against the adoption of the Pentium. First, the new P54C required a new socket, Socket 5, which was not backward compatible with the previous one. Second, and most importantly, a bug was discovered in the floating-point unit integrated into the Pentium design, which became popularly known as the "FDIV bug". What really caused problems was not the mistake itself, but the fact that Intel had been aware of it five months before it was reported by Professor Thomas Nicely of Lynchburg College, who found it while using the processor in his work on Brun's constant.

In addition, Intel had to offer an upgrade option for systems with older sockets, and so the Pentium OverDrive was created. According to Intel, users with a 486 system could install an OverDrive processor and achieve performance very similar to that of a Pentium. Unfortunately, the OverDrive design suffered from multiple compatibility issues that affected its final performance. The alternatives from AMD and Cyrix offered competitive performance, and there were circumstances in which even a 486DX4 could beat an OverDrive. Nor should we forget the price: although Intel had launched the OverDrive so that users could avoid replacing the whole system, virtually all the money saved had to be spent on the OverDrive itself. OverDrive processors were made for Sockets 2, 3, 4, 5, 7 and 8.

In just over three years, Intel had managed to triple the speed of its Pentium chips. The P54C paved the way for the P54CS, which raised frequencies to 133, 150, 166 and 200 megahertz. Between the two lines appeared the P54CQS, represented only by the Pentium 120. From the 120 megahertz designs onward, Pentium chips left the FDIV bug behind, but the road then split in two. On one side appeared the P55C, better known among users as the Pentium MMX. On the other, Intel released the Pentium Pro in November 1995. To close out the fifth generation, Intel brought the P55C to market with the MMX extension, an additional instruction set that increased chip performance in certain multimedia tasks. The desktop versions ran at 166, 200 and 233 megahertz, using the Socket 7 that had existed since the P54C. There were also MMX OverDrive processors for the previous sockets, but they remained as unpopular as ever.

Next in line was the Pentium Pro. From its launch it was officially classed as a member of the sixth generation of processors, also called P6 or i686, a term still used today (you can find it in the image names of some Linux distros). The Pentium Pro quickly proved to be a completely different chip from the P5 family, despite the shared name. It did not have MMX instructions, but its performance was massive, thanks to higher clock speeds and designs with up to one megabyte of L2 cache. One of its highlights was its performance with 32-bit software, where it outperformed the Pentium by at least 25 percent. At the same time, this was one of the things that hurt it: despite its excellent 32-bit performance, it was slower in 16-bit tasks, where even its younger siblings beat it. As if that were not enough, a high price (caused by the complexity of the design) and the need to change sockets again (Socket 8) made the Pentium Pro very unpopular among users, although it kept a presence in high-performance servers. Its models ran at 150, 166, 180 and 200 megahertz, with 256 KB, 512 KB and 1 MB L2 cache variants.

Despite the problems faced with the Pentium Pro, Intel took what it learned when designing this chip and used it as the basis for what would become the Pentium II, Pentium III, Celeron and Xeon. The Pentium II first appeared in May 1997 and had a radical design: the "chip" became a "cartridge", officially introducing Slot 1. It also brought the MMX extension and corrected the 16-bit application performance problems that had plagued the Pentium Pro. Its L2 cache was 512 KB, albeit slower, running at half the processor's clock speed. However, the fact that Intel placed the cache memory in the cartridge rather than inside the core cut costs enough to make the Pentium II a very attractive option for consumers, far more than the Pentium Pro had ever been. Its first version was known as Klamath, which used a 66 megahertz bus and ran at 233, 266 and 300 megahertz. Less than a year later came the Deschutes family, with 333, 350, 400 and 450 megahertz models. The Deschutes bus was raised to 100 megahertz (except for the 333 model, which stayed at 66), and this bus speed was kept in subsequent processors. Three months after the arrival of Deschutes, Intel released the Celeron, an economical and much less powerful version of the Pentium II. Its main weakness was the absence of L2 cache, which drew many complaints from the public. However, both this model and the later Celeron with 128 KB of L2 cache showed unprecedented overclocking potential. The Celeron 300A became one of the most coveted processors because, with proper configuration, it could reach an impressive 450 megahertz, performance that had little to envy the much more expensive Pentium II 450. As for the Xeon, from the beginning it was positioned as a server processor. A slot different from the Pentium II's and a larger amount of cache were just two of the many factors that determined this.

The Pentium III line was clearly divided into three sub-generations. The first was Katmai, which brought higher clock speeds and the new SSE instructions for multimedia acceleration. Its models ran at 450, 500, 533, 550 and 600 megahertz. While the Xeon had also acquired the characteristics of the Pentium III through new versions, eight months later, in October 1999, the Coppermine models arrived. Coppermine marked the return of sockets to Intel chips with the arrival of Socket 370. Many users came across the famous Socket 370 to Slot 1 "adapters", used to run the new chips on Slot 1 motherboards, along with the compatibility issues they could bring. Coppermine was among the first to offer "regular" users a 1 GHz processor, a barrier that was not expected to be reached for several more years. Other details include the 133 megahertz bus, full-speed L2 cache and an update of the Celeron line incorporating the Coppermine improvements. Finally came Tualatin. On their own, these Pentium III chips were very powerful, as the top models had a 1.4 GHz clock and 512 KB of L2 cache. But they were extremely hard to come by, not to mention their high cost, and many motherboards were incompatible with them due to design limitations in their chipsets. Anyone who had a motherboard that supported Tualatin had a monster of a computer.

Up to this point we have covered what may be regarded as "ancient history", because we now enter the era of the first Pentium 4 chips, which, for lack of a better word, came in too many variants for users to keep track of. The first belonged to the Willamette P4 family, starting at a clock speed of 1.3 GHz, with a new socket (423) and the introduction of SSE2 instructions. Then came Northwood, Gallatin (known as Extreme Edition), Prescott, Prescott 2M and finally Cedar Mill. All these families brought multiple changes: Socket 423 was abandoned in favor of Socket 478, and there were Hyper-Threading, 533 megahertz buses, SSE3 instructions, 800 megahertz buses, virtualization, Socket 775 and, most importantly, the arrival of the NetBurst microarchitecture. The Pentium 4 reached an impressive maximum speed of 3.8 GHz, but this was more a limitation than an achievement. Intel could not go beyond this speed without further increasing the voltage and the thermal design, which would have been both problematic and too expensive. Intel needed to simplify things, in several senses.

- Socket 423 was abandoned within a reasonably short time

The solution came through something that is an everyday thing for us: multiple cores. Although the first dual-core Pentium (known as the Pentium D) appeared in May 2005, these chips can still be found running as well as on the first day. Intel's early attempts were somewhat crude, as the Pentium D proved very inefficient, especially in terms of temperature. Smithfield and Presler, along with their XE variants, made up this series of Pentium D processors. The NetBurst architecture needed a replacement, and it came with something already familiar to all of us: the Core 2 Duo processors. While the Core models moved to the forefront, representing the mid-range and high end, the Pentium was relegated to a much more humble role, identifying processors with what we consider "basic capabilities". However, that does not mean the Pentium name itself is destined to disappear. Today Intel keeps three sockets active: 775, 1156 and 1366. The first is slowly being displaced, while the other two host all the new processor models, including the Pentium G6950, released in January. So don't worry if your budget only stretches to this new Pentium. You can make the leap to a more powerful CPU at any time, but remember that the Pentium name has left its mark on the world of computing, with innovations, attempts, failures and stunning successes. If it were not for the Pentium name, Intel probably would not be where it is now.

Wednesday, September 17, 2014

INTEL EDISON.

Features.

Earlier this year, Intel announced the Intel Edison, a small computer shaped like an SD card that is well suited to the Internet of Things and the world of wearables. Yesterday Intel announced a version that appears to be the final design: it is no longer shaped like an SD card, but it is still small and uses the x86 architecture.

Arduino and Raspberry Pi may look very similar, and we may even have assumed that these two hardware platforms compete to solve similar problems. In reality they are very different. To begin with, the Raspberry Pi is a fully functional computer, while the Arduino is a microcontroller, which is only one component of a computer.

Although the Arduino can be programmed with small applications written in a language like C, it cannot run a full operating system and certainly will not replace your computer any time soon. Here is a guide to telling the Arduino and the Raspberry Pi apart, and to determining which of these two DIY hacking devices best suits your needs as a maker.

Arduino | Raspberry Pi Model B |

Wednesday, September 10, 2014

TELEMATICS

WHAT IS TELEMATICS?

Telematics consists of using software tools to add intelligence to traditional telecommunications or, put another way, offering computer services remotely through telecommunication systems such as the Internet.

Telematics is a branch of engineering that arose when telecommunication systems, such as telephone networks or computer networks, began to acquire greater intelligence. When we say a system gains intelligence, we mean that it can perform certain functions that a human could carry out. Technological advances in electronic and computer systems have made it possible to add intelligence to ever smaller devices, from personal computers and laptops to new mobile devices such as smartphones. This, together with the explosive growth of the global Internet, has created a growing need for professionals in information and communication technology (ICT).
